Artificial Intelligence

Many news consumers are uneasy about AI, and they bring that discomfort—and often distrust—into how they view the use of AI in journalism. New Trusting News research confirms that reality: Audiences are skeptical, cautious and, in some cases, firmly opposed to AI being used in news content.

So, how can journalists be transparent about their use of AI in a way that doesn’t lose — and maybe even builds — audience trust?

Our research and newsroom experimentation offer both caution and opportunity. Most people want AI use disclosed, but disclosure alone doesn’t guarantee trust. In fact, some news consumers expressed less trust after learning that AI had been used, even when the AI use disclosure included details they said they wanted, including the involvement of human oversight and commitment to accuracy and ethics.

Based on new and previous research, Trusting News is recommending journalists take these steps, which we walk you through in this Trust Kit.

Journalists should disclose their use of AI and find ways to explain why AI was used and how humans fact-checked for accuracy and adhered to ethical standards.

Quick AI resources

In this Trust Kit, you’ll find resources to help you:

- Listen. A survey to learn how your community feels about AI and your use of it. A community interview guide to dive deeper into those feelings. Questions to ask to get regular community feedback on your use of AI.

- Be transparent. A worksheet to create an AI use policy. A worksheet to create in-story AI use disclosures. Sample language to copy/paste into AI use disclosures and policies. Newsroom examples of AI policies, AI use disclosures and other AI transparency initiatives.

- Be ethical. A resource to help spot and disclose AI in content you don’t control. A collection of research to help you understand how the public feels about AI.

Goals

This Trust Kit helps you:

- Learn how your audience feels about journalists using AI and what they expect from you when you do use it.

- Be transparent about your use of AI by creating public-facing AI policies, in-story disclosures and other transparency elements.

- Consider how to educate your audience about AI and demonstrate responsible and ethical AI use.

How to engage

According to the 2025 Edelman Trust Barometer, less than a third (32%) of Americans say they trust AI.

This context is important as journalists and the news industry decide how to move forward. Ultimately, there is a lot that AI and technology can do to help make news coverage more useful and accessible to audiences. But newsroom decisions should be based on a deep understanding of how the public perceives this emerging technology, and news organizations must take into account that significant portions of their own audiences intensely distrust any use of these technologies.

By actively involving the audience in discussions about the use of AI in news production, journalists demonstrate a commitment to transparency and responsiveness. This approach allows journalists to understand community concerns, preferences and expectations regarding AI, leading to more tailored and relevant reporting.

It also allows for newsroom transparency efforts to be based on a real understanding of audience needs and concerns. It’s hard to build trust without first understanding possible mistrust.

In addition, soliciting feedback and insights from the community can foster a sense of partnership and co-creation, empowering the community to actively participate in shaping the future of journalism and creating stronger relationships.

Newsroom case study: ARLnow

One example of a newsroom listening comes from ARLnow. In this example, we see a newsroom change course after hearing feedback from its audience. The newsroom was sometimes using AI to create images when it did not have actual photos to fit a story.

The newsroom received feedback from its audience expressing discomfort with this practice, so it asked readers whether they were comfortable with AI being used this way in its news coverage. While a majority said they were, 48% said they were not, so the newsroom said it would stop the practice and instead commission “human-created illustrations for the real estate and local business stories for which AI images were previously used.” In announcing the change, the newsroom also shared the 10 ways it is using AI in its news process.

So, how can you listen?

You should base your decisions about how to talk about your AI work on what will be helpful to the specific community you serve. What do they understand? What do they fear? What are they looking for?

To help newsrooms engage and listen to their audiences about AI, Trusting News has developed four tools:

Survey: “Using Artificial Intelligence in our journalism”

Trusting News created this survey for newsrooms to use, drawing on previous research and journalist feedback about transparency and disclosure. The goal was to better understand news consumers’ comfort levels with how journalists use AI and to gather insights on what should be included in disclosures. The survey aimed to address newsrooms’ current needs by focusing on how they are using AI, or considering using it, in reporting rather than exploring its full range of potential uses.

Trusting News is making the full survey available so other newsrooms can replicate it in their own communities. Click here to make a copy of our survey for your own use.

Some of the questions from the survey include:

- How specific should explanations about our newsroom’s use of AI be?

- Is knowing why we decided to use AI in our reporting process important to you?

- Would it be useful if our newsroom provided information and tips to help you better understand AI in general and detect when content has involved the use of AI?

When Trusting News asked a cohort of newsrooms to use the survey, most collected responses with the provided Google Form, while a few opted for platforms like SurveyMonkey. To distribute the survey, newsrooms shared the link in stories, newsletters and social media posts.

View examples here of how different newsrooms distributed the survey.

AI Community Interview Guide

To gather deeper insights into perceptions of AI use and understanding of the technology, newsrooms can conduct one-on-one interviews with community members.

Research shows that when journalists simply take the time to talk with people and listen, it builds trust and goodwill. In one Trusting News listening project, 86% of community members interviewed said they felt a sense of trust building with the reporter or the news organization after a conversation with a journalist about news consumption, and 28% said they were considering subscribing to that news outlet. The journalists said it helped them better understand how to serve their audiences.

Think about the impact this could have if newsrooms incorporated this practice into their larger efforts. That’s true in general, and it’s true for specific topics like the use of AI.

Building on this, Trusting News created an “AI Community Interview Guide” for journalists to use in their own communities. Find the full guide here.

Some of the questions in the guide include:

- Is there anything you’re afraid of when it comes to us using AI?

- When we use AI, what do you want to know about that use?

- When thinking about a news organization’s use of AI, how important is it to you that a human was still involved in the process or looked at the content before it was published?

Continually invite feedback

This is a quickly evolving landscape. We should expect both newsroom technology use and public perceptions to continually shift.

In addition to the survey and community interview guide, we also recommend regularly checking in with your audience about how they feel about your AI use, along with your transparency about that use.

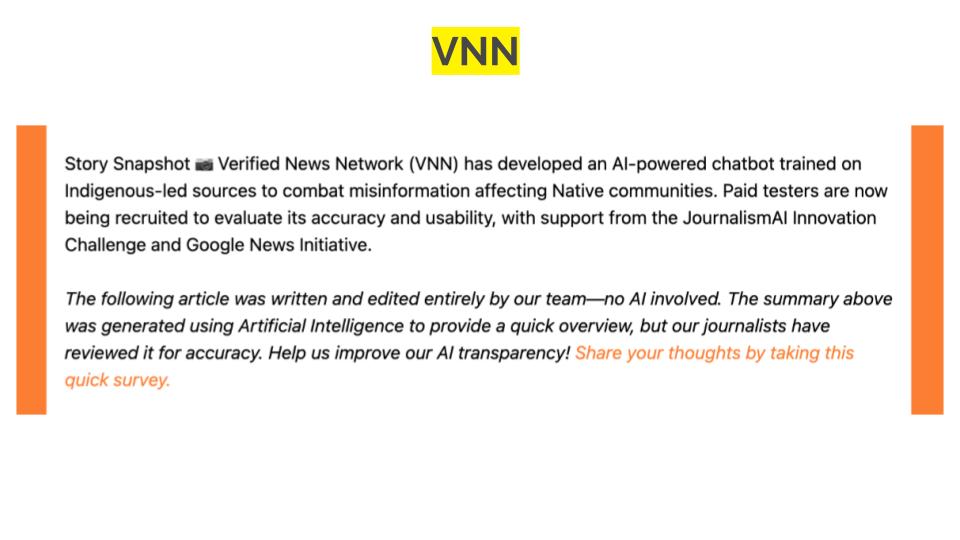

One way to invite ongoing feedback is to use an in-story survey. This can be done by linking out to a survey on another page or by embedding a survey within or underneath a story. Here’s an example from Verified News Network:

“The following article was written and edited entirely by our team—no AI involved. The summary above was generated using Artificial Intelligence to provide a quick overview, but our journalists have reviewed it for accuracy. Help us improve our AI transparency! Share your thoughts by taking this quick survey.”

To help you regularly ask for feedback, we have suggested language and sample questions in this Trusting News AI Resource: Use these questions to see what your news consumers think about AI.

A Scale: How the public feels about specific uses of AI in journalism

Trusting News has been working with journalists and researchers to understand news consumers’ comfort levels with how journalists use AI.

We have learned people are worried about AI’s ability to contribute to more misinformation and make it harder for them to decipher what is true and what is false. These feelings of fear and concern have led almost a third of the news consumers we surveyed to say journalists should never use AI in their journalism.

Of course, not everyone realizes that simple tools like autocorrect are AI-based, and most people are comfortable with that kind of use. Here’s a look at public perceptions of a range of uses of AI in journalism. Use this scale to guide newsroom decisions, disclosures and audience conversations around AI and its use in your newsroom.

GREEN — Acceptable

Audiences generally find these applications of AI acceptable.

- Checking spelling/grammar

- Transcribing interviews

- Language translation (with human review)

YELLOW — Acceptable with guardrails

Audiences are mixed, with many needing more information and explanation.

- AI-assisted data analysis with human review

- AI-assisted headlines or social posts with human review

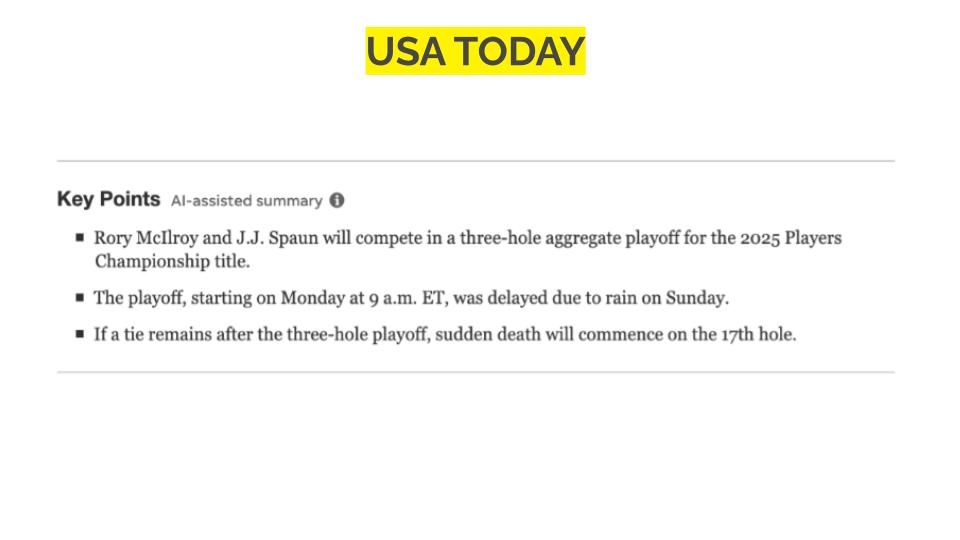

- AI-assisted story summaries with human review

RED — Not acceptable

Most people are uncomfortable with these uses or need a lot more information before accepting them.

- Writing stories with human review

- AI-generated language translation without human review

- Writing stories without human review

- Writing headlines/social without human review

- AI-generated news anchors as stand-ins for real journalists

Source: Trusting News research with newsroom partners

How to disclose

News consumers continue to tell newsrooms they want journalists to disclose their use of AI. In one Trusting News cohort, an overwhelming majority (93.8%) of news consumers surveyed said they want the use of AI to be disclosed.

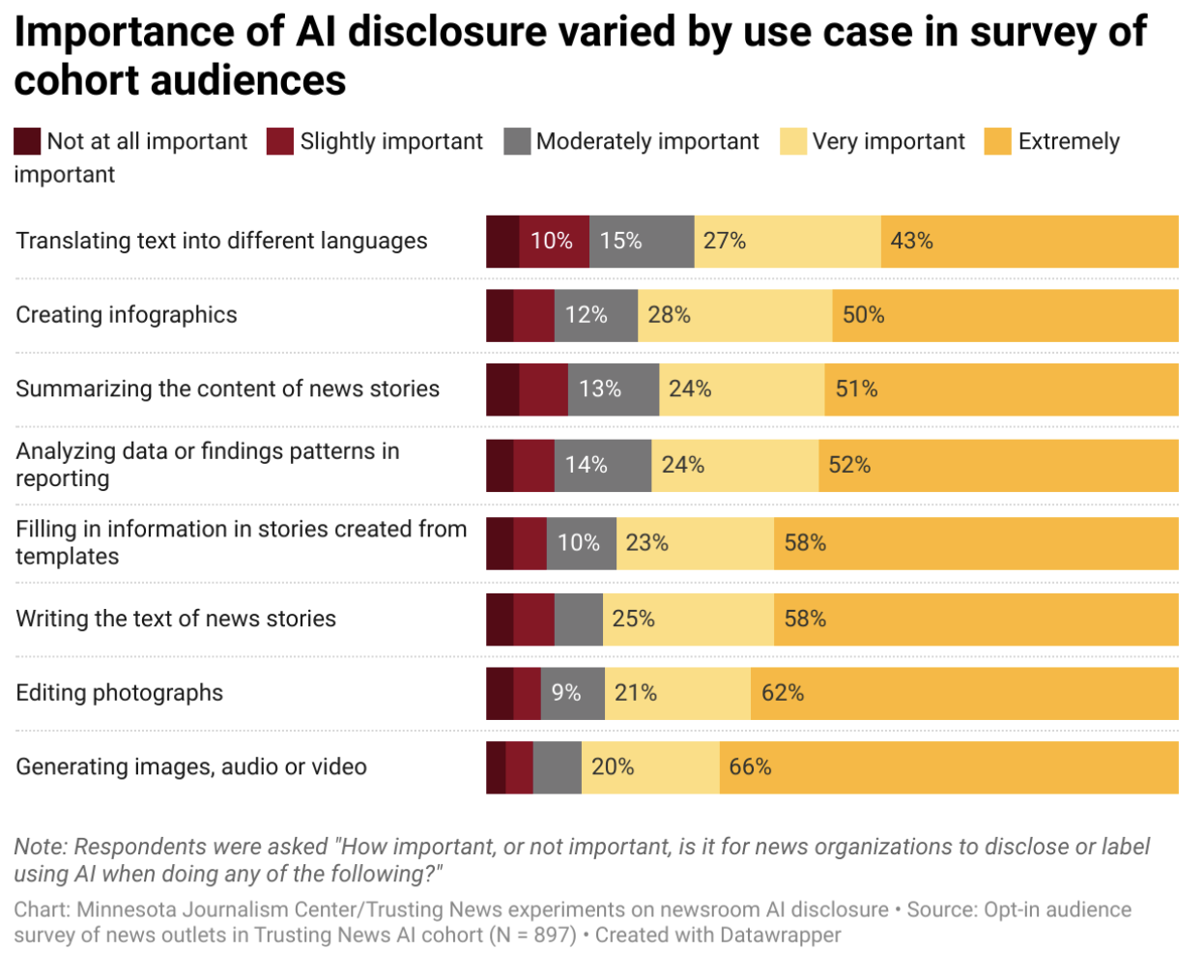

In the latest research, when survey respondents were asked when disclosure of AI use would be important, more than half rated disclosure “extremely important” for every use case but one. The exception was translating text into different languages, and even for that use case, 43% of survey respondents still said disclosure would be “extremely important.”

If you are using AI in your newsroom, it’s clear the audience wants to know about it and wants more details than a vague “AI was used in this story” statement. The guidance below covers how to write an AI use disclosure.

(To stay up to date on this research, fill out this form and subscribe to our weekly Trust Tips newsletter.)

Create an AI use policy

All newsrooms should get on the record about how they are (or are not) using AI. One way to do that is in an AI use policy. At Trusting News, we believe your AI policy should be a living document that changes as your use of the technology evolves. If your newsroom is wary of publishing new policies, or if the approval process would be cumbersome, consider other ways to get this information to your audience.

An editor’s column or an FAQ can be a less formal way to share your process. Picture a headline like “How our newsroom is exploring AI in our work.” Use language like “we’re asking questions” or “we’re exploring.” Say “we typically” or “we won’t usually” rather than “we always” or “we never.”

That type of transparency models experimentation and evolution and can demonstrate thoughtfulness and care. Don’t let the wait for a formal process get in the way of your public’s understanding of — and trust in — your use of AI. Use this Trusting News AI Worksheet to create an AI use policy based in experimentation and user trust. If you want a more in-depth and formal guide, Poynter has extensive AI ethics guidelines and sample policy language.

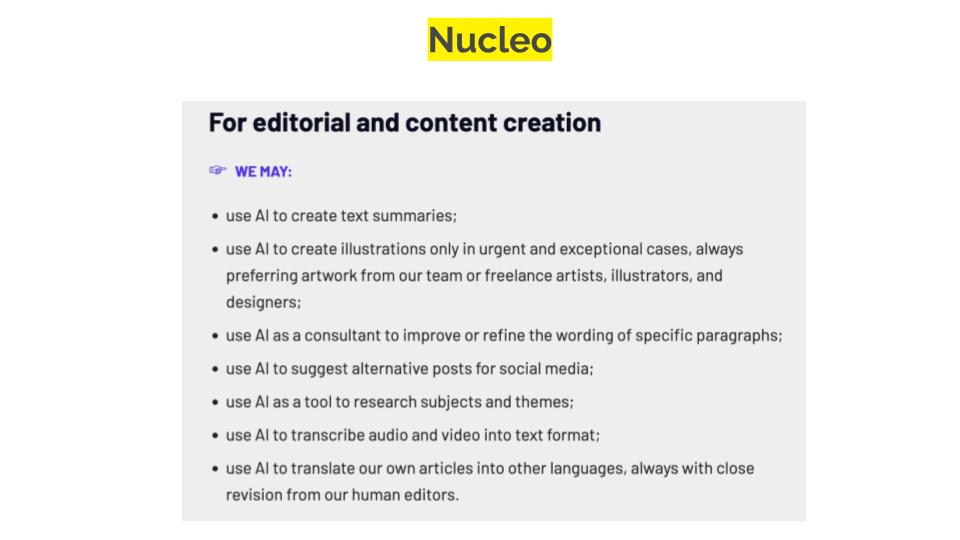

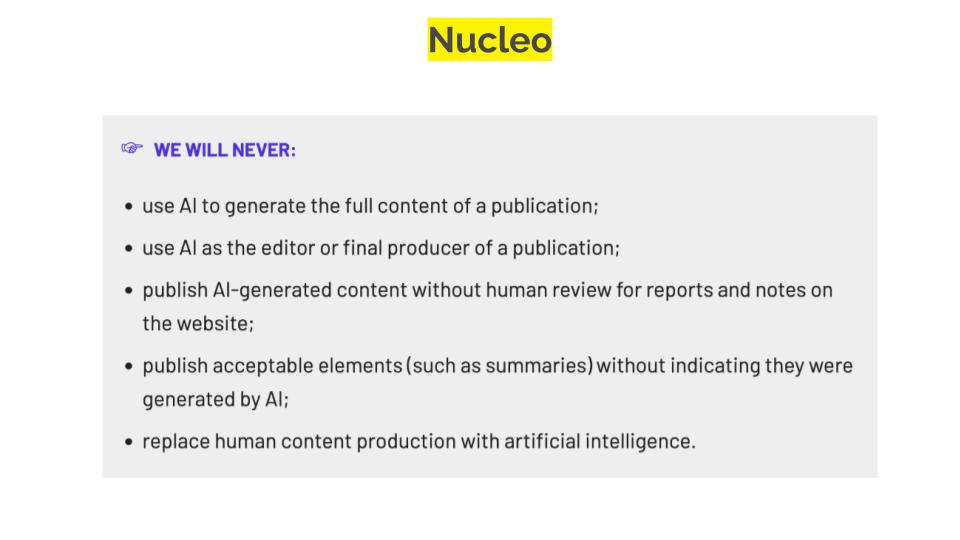

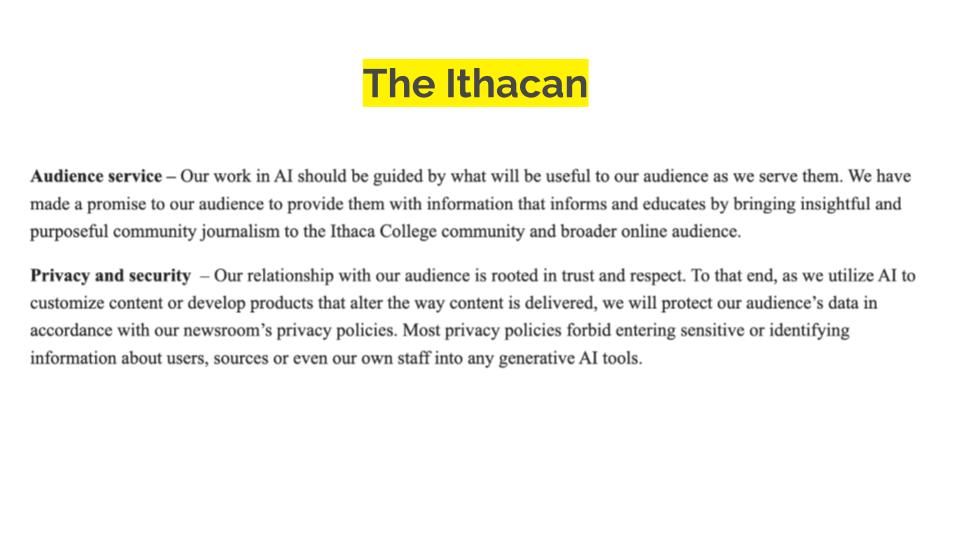

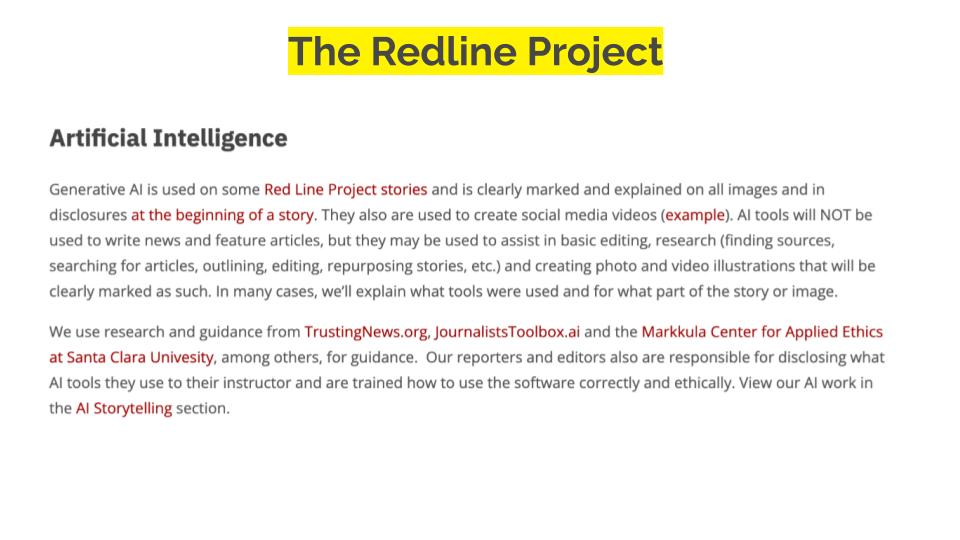

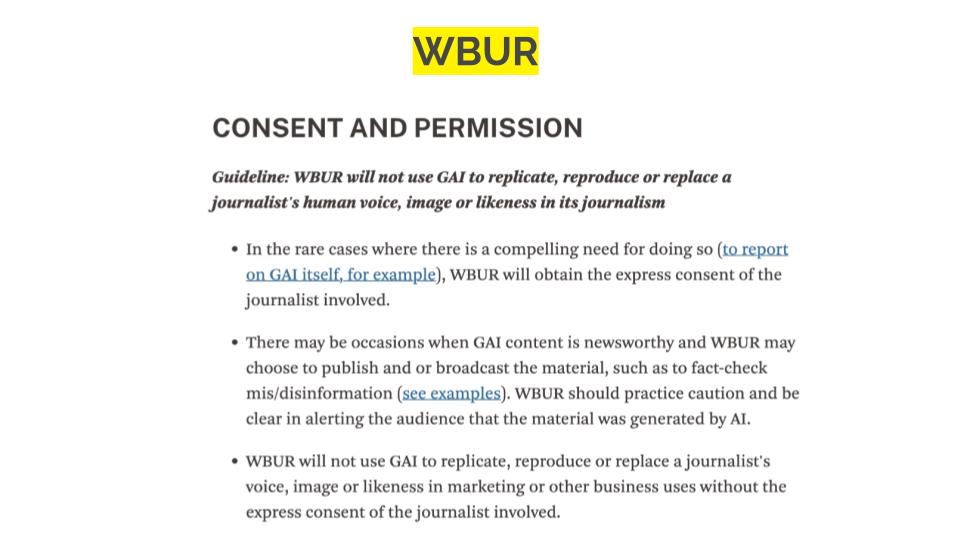

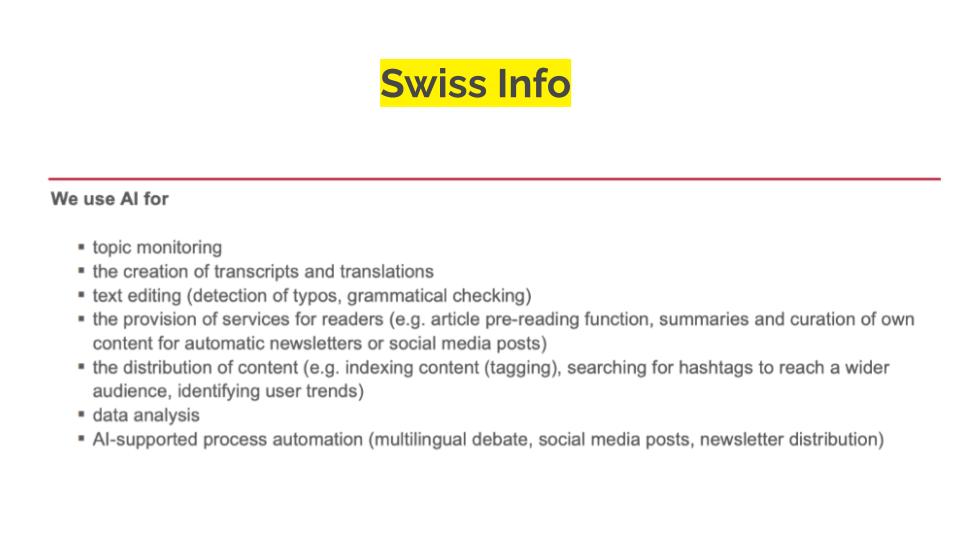

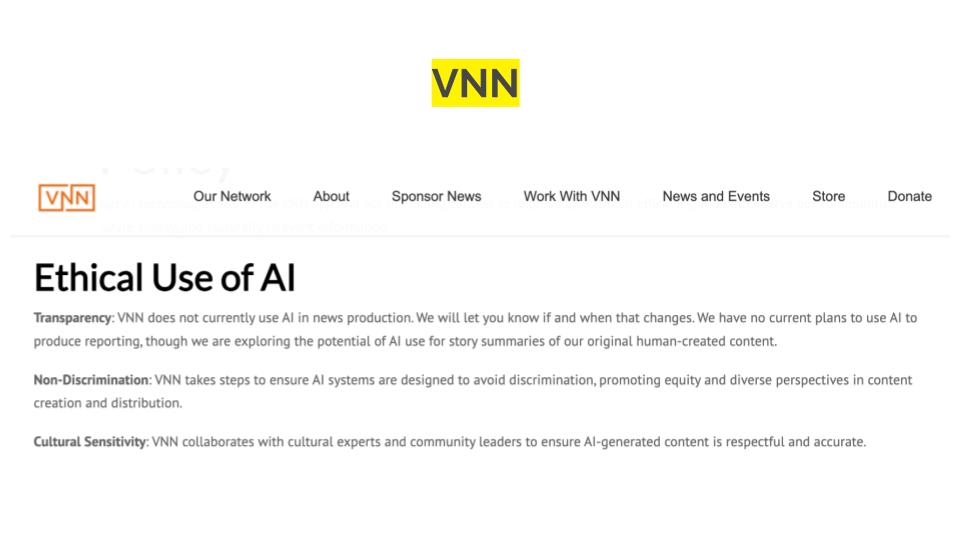

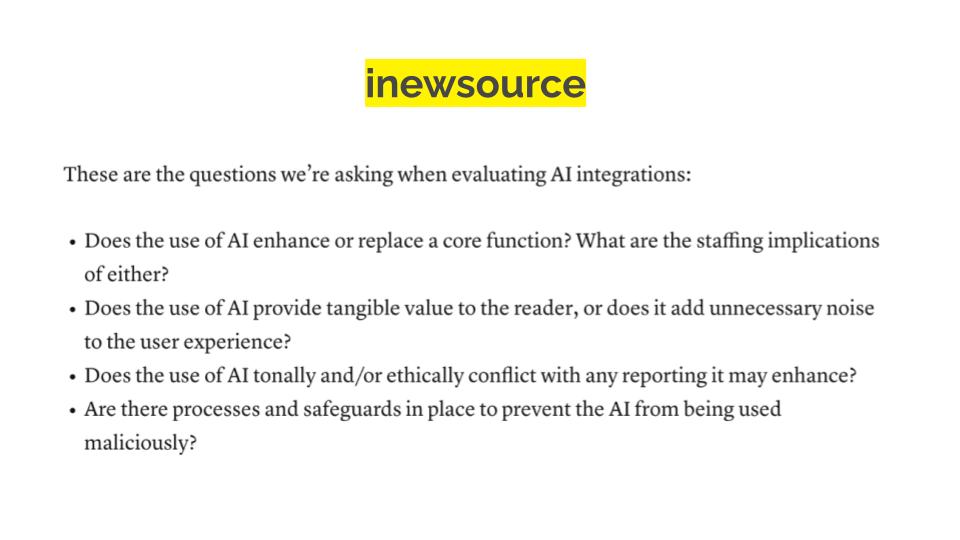

View examples of newsroom AI use policies below and in this example database.

What users say they want to know about AI use

According to data collected by one of our newsroom cohorts, news consumers said the following information would be important to include in a disclosure:

- Why journalists decided to use AI in the reporting process (87.2% said this would be important)

- How journalists will work to be ethical and accurate with their use of AI (94.2% said this would be important)

- Whether and how a human was involved in the process and reviewed content before it was published (91.5% said this would be very important)

Trusting News recently led another newsroom cohort through experiments testing AI use disclosures with this information. In this study, news consumers came across AI use disclosures in real news stories and were asked, among other questions, to share their feelings on the disclosure language, on the general use of AI in news and on how the AI use affected their trust in the content. Our research found:

- AI disclosures often led to decreased trust. People were less likely to trust stories when they saw AI use disclosed, especially in real news stories. Trust dropped even in A/B tests, though reactions varied depending on how the AI use was framed.

- The fact that AI was used mattered more than how it was used. Even detailed explanations, including why AI was used and whether humans were involved, didn’t fully offset the negative reaction to AI use disclosures.

- Audiences want more detail. Respondents consistently asked for more specifics in disclosures, especially about how AI was used, why it was used, and whether humans reviewed the content.

- People still appreciated transparency. Almost half of respondents (47%) reacted positively to the information in the AI labels.

We know these findings might tempt journalists to avoid disclosing AI use altogether. But because most people say they want AI disclosed in almost every journalistic use case, not disclosing your use of AI would probably lead to more distrust than disclosing it. People don’t want to be tricked or left in the dark. Transparency is also built into the core of journalism ethics. So instead of hiding our use of AI and hoping people won’t notice, our team firmly believes the way forward is to offer more education and transparency.

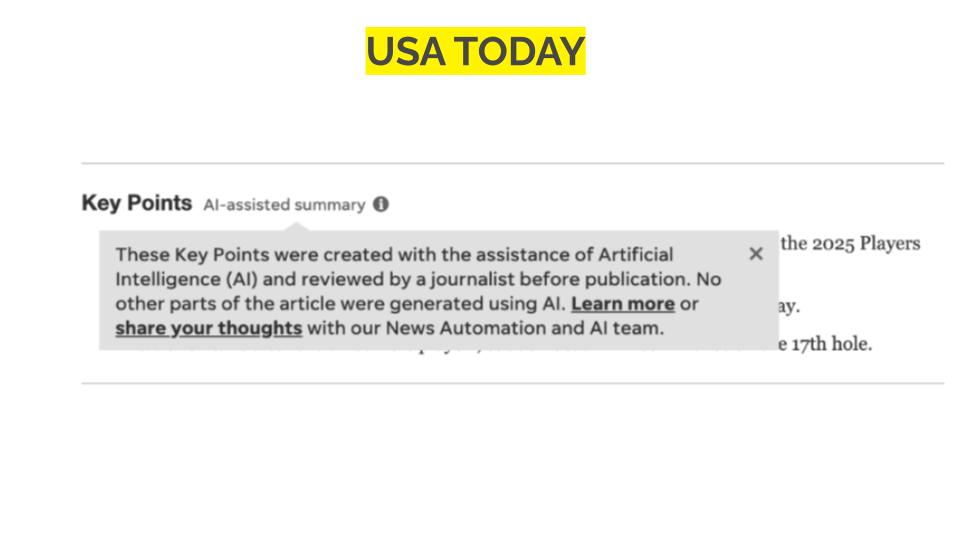

What to include in your AI use disclosures

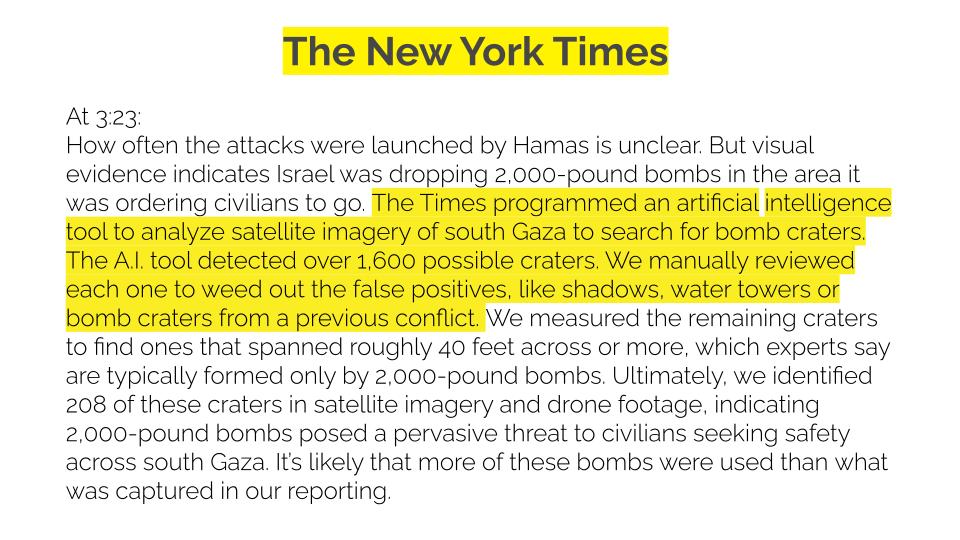

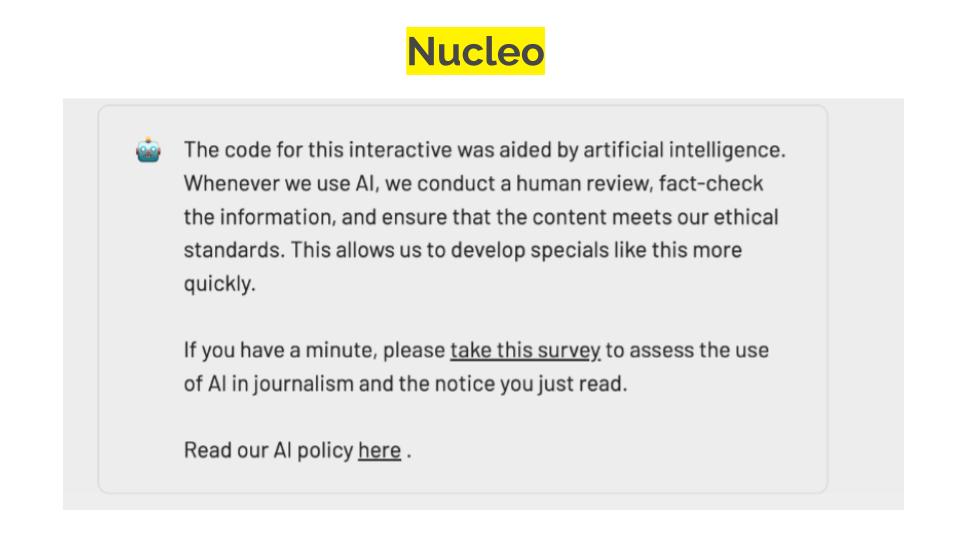

Based on this new research, Trusting News is recommending that AI use disclosures include the following information:

- Information about what the AI tool did

- Explanation about why the journalist used AI, ideally using language that demonstrates how the use of AI benefits the community or improves news coverage

- Description of how humans were involved in the process (assuming this is true)

- Explanation about how content is still ethical, accurate and meets the newsroom’s journalistic standards

- A link to an AI use/ethics policy or similar explanation describing your commitment to accuracy, even when using AI

In addition, Trusting News believes news consumers want more than in-story disclosures. Journalists should consider going further with behind-the-scenes explainers and AI literacy initiatives.

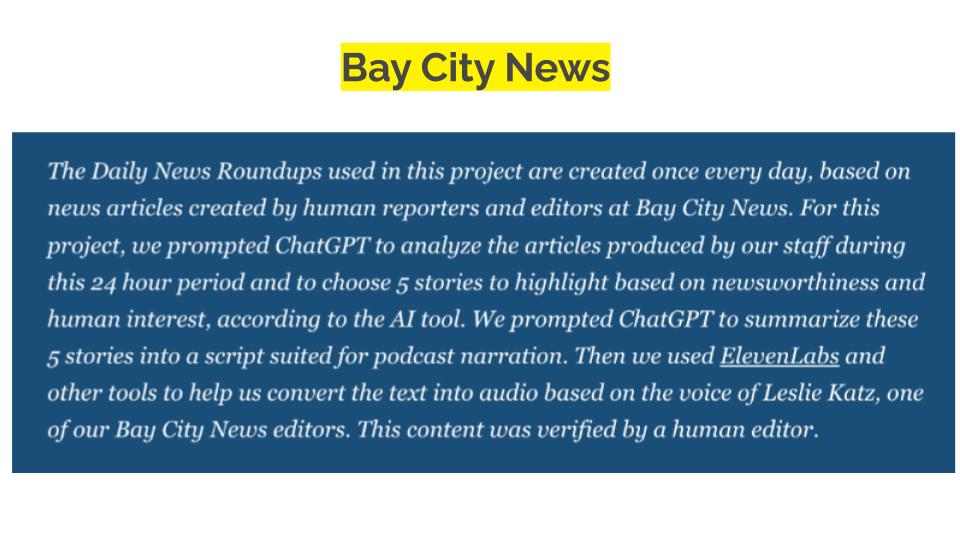

What does this look like? One newsroom leaning into more transparency around the use of AI is Bay City News Foundation. On its “Experiments with AI” page, the newsroom shares updates and details about its AI experimentation with cartoons, podcasts and more so audiences can learn alongside it. The site introduces various test projects, including an AI-assisted news roundup podcast, and invites public feedback on each. These experiments aim to demystify AI, reflect on ethical challenges and uphold journalism values like accuracy, trust and community input.

View other examples of more in-depth AI disclosures and transparency stories in this example database.

Trusting News AI Disclosure Template

Since 2016, Trusting News has been developing strategies focused on transparency, including what language works best when explaining reporting goals, mission and process. Based on that work and on our AI research, we recommend writing an AI use disclosure like this:

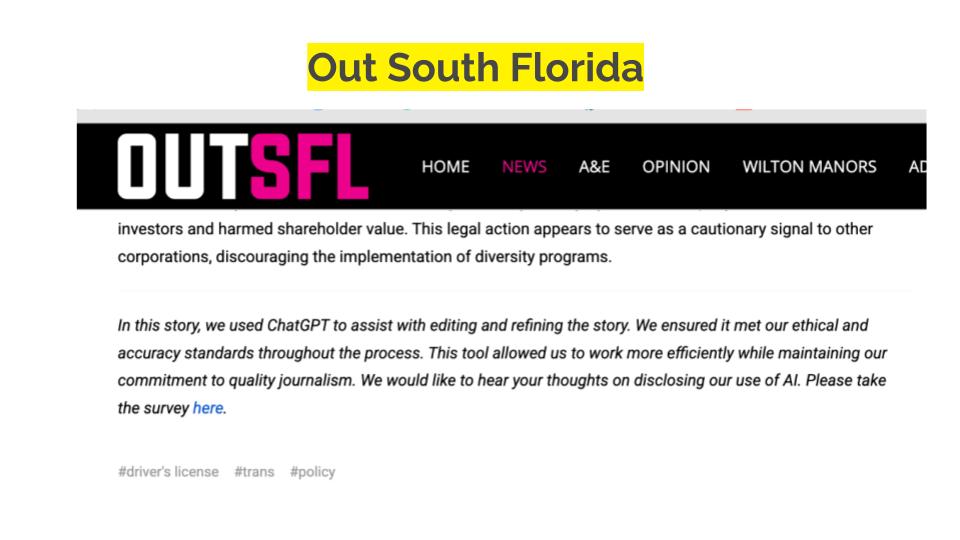

In this story, we used (AI/tool/description of tool) to help us (what AI/the tool did or helped you do). When using (AI/tool), we (fact-checked/made sure it met our ethical/accuracy standards) and (had a human check/review). Using this allowed us to (do more of x, go more in depth, provide content on more platforms, etc). Learn more about our approach to using AI (link to AI policy/AI ethics).

We have created a worksheet to help you complete the Mad Libs-style template above. Need inspiration? View sample text for filling in the template, including language describing what you did with AI and why you used it, in the Trusting News AI Resource: Sample language to include in story-level AI use disclosures.

For even more inspiration, view newsroom AI use disclosure examples below and in this example database.

AI disclosure placement and length matter

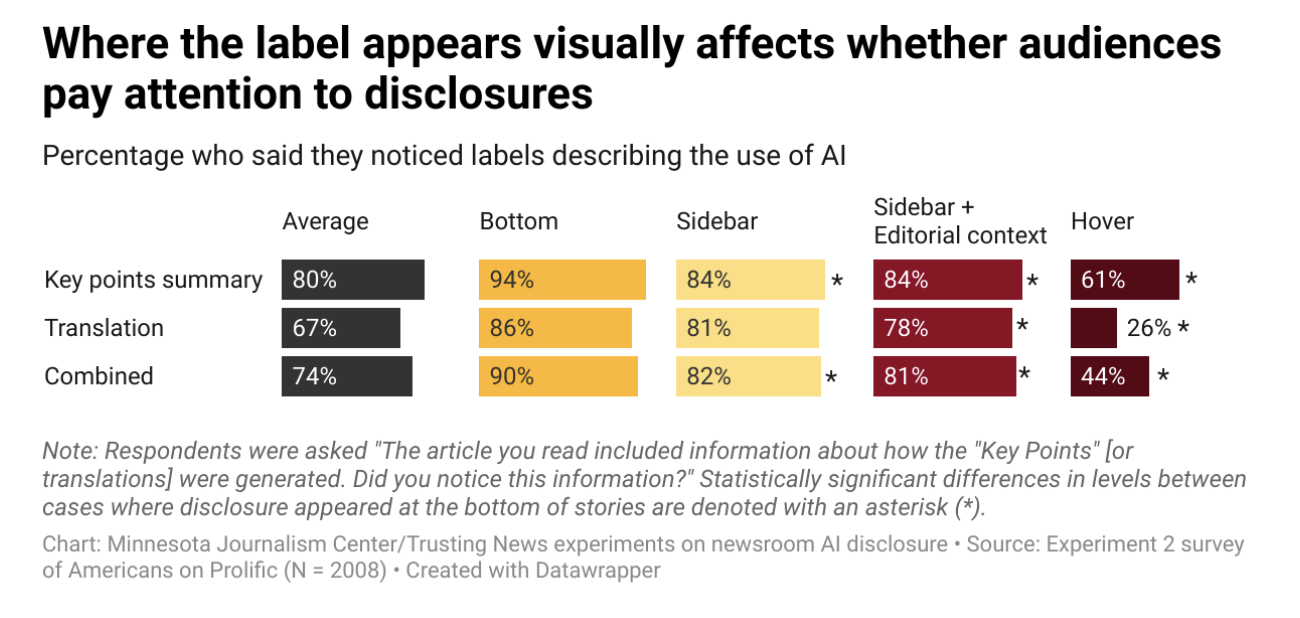

Placement and display of an AI use disclosure may have a greater impact than the language used in the disclosure when it comes to audience attention and response.

In our A/B tests, people were much more likely to notice disclosures that appeared clearly on the story page, like at the bottom of a story or in a sidebar, with noticeability rates over 80%. But when the disclosure only popped up on hover, fewer people saw it. In one case, only 26% noticed it. On average, less than half of respondents noticed hover-only labels, compared to 74% who noticed labels that were immediately visible.

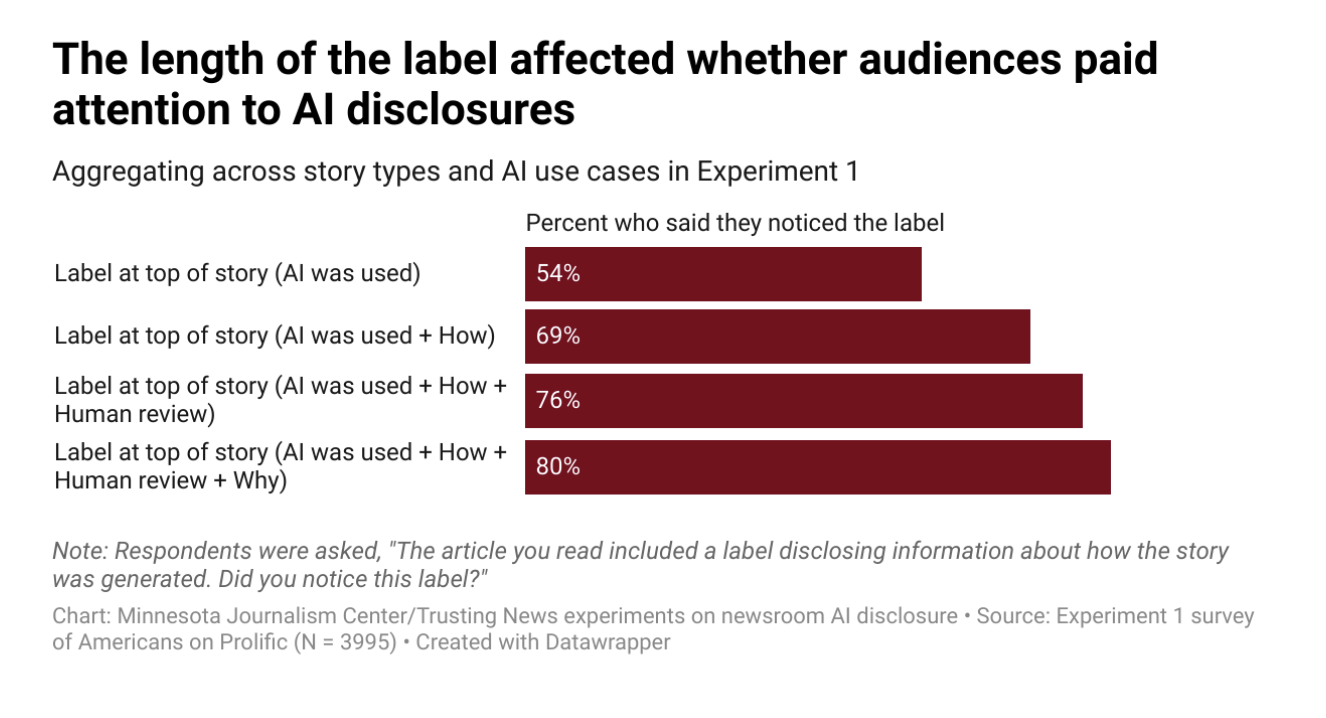

It’s not just about placement though — length and detail matter too. People were more likely to see and remember a disclosure when it included specifics: that AI was used, how and why it was used, and that a human reviewed the content. Simple labels that just said AI was used were easier to overlook.

According to our research, more detailed disclosures appear most effective among people who are otherwise the most trusting of news, and this same group’s confidence appears most vulnerable to erosion when AI use is disclosed.

The takeaway? Journalists should provide details within in-story disclosures that answer: how AI was used, why AI was used, how a human was involved and how ethics and accuracy were maintained. In addition, consider writing more in-depth explainers or producing content (like videos or social posts) demonstrating your use of AI. Then link to these explanations in your in-story disclosures.

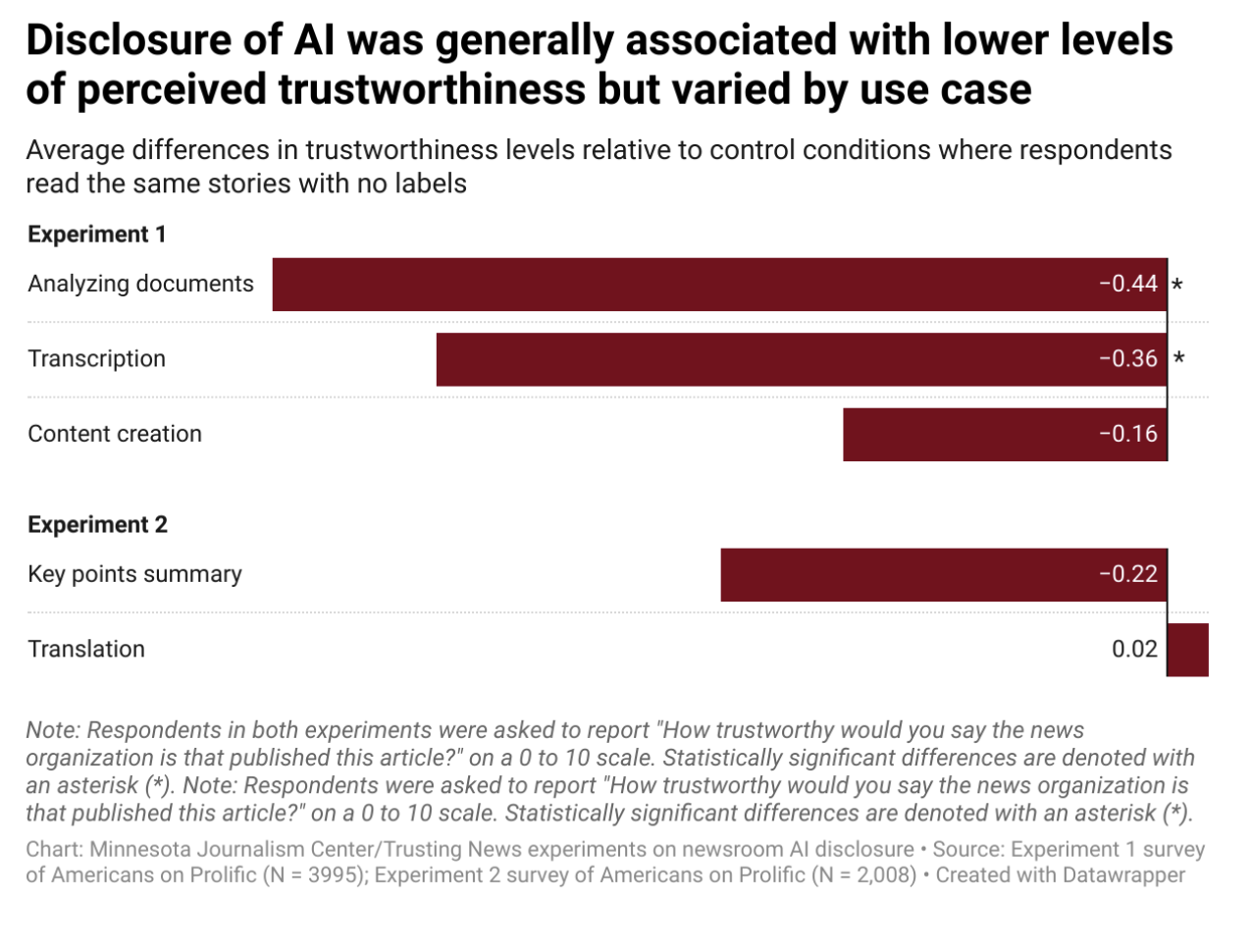

How AI is used matters

Our recent A/B tests studied how people reacted to stories when they saw disclosures about the use of AI compared to people who read the same stories without disclosures. We found, on average, similar decreases in trust for some AI use cases, but not all. The graphic below details the differences reported.

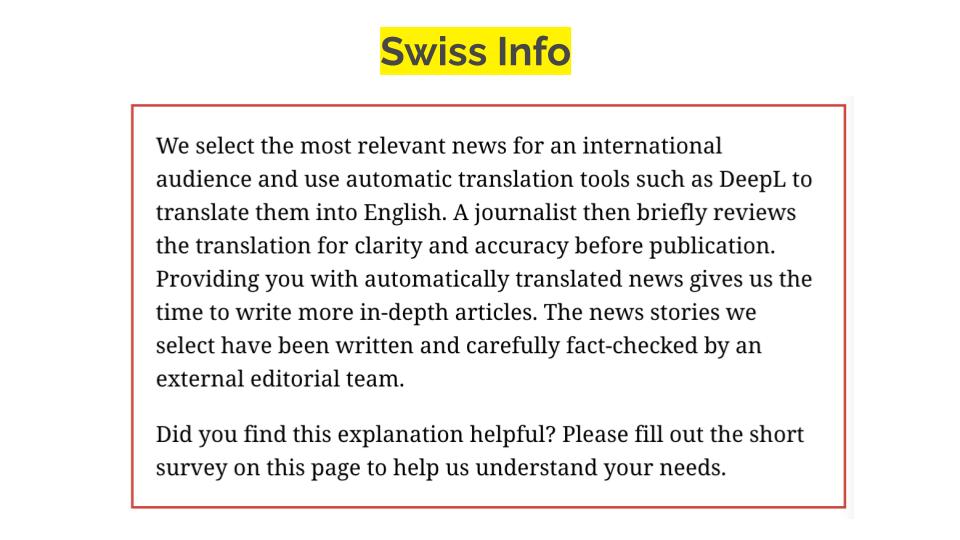

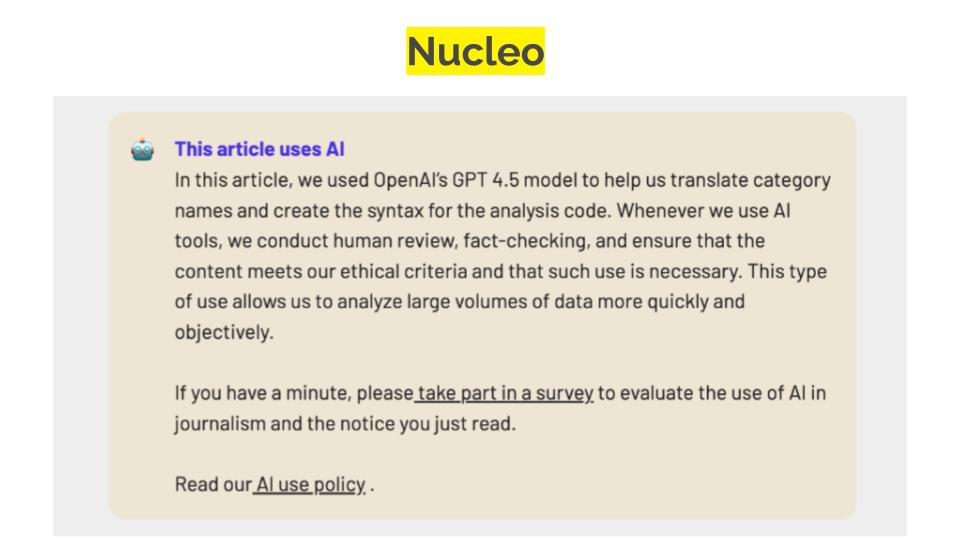

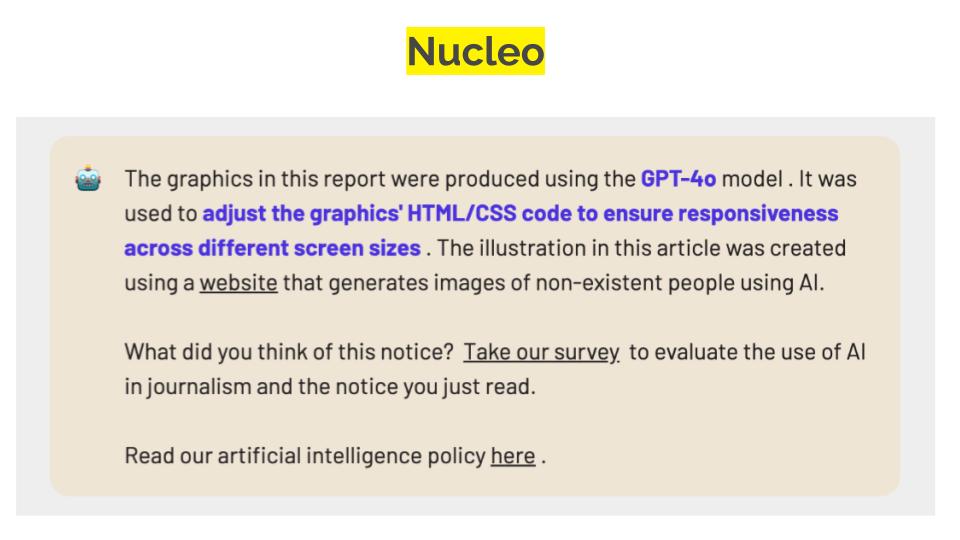

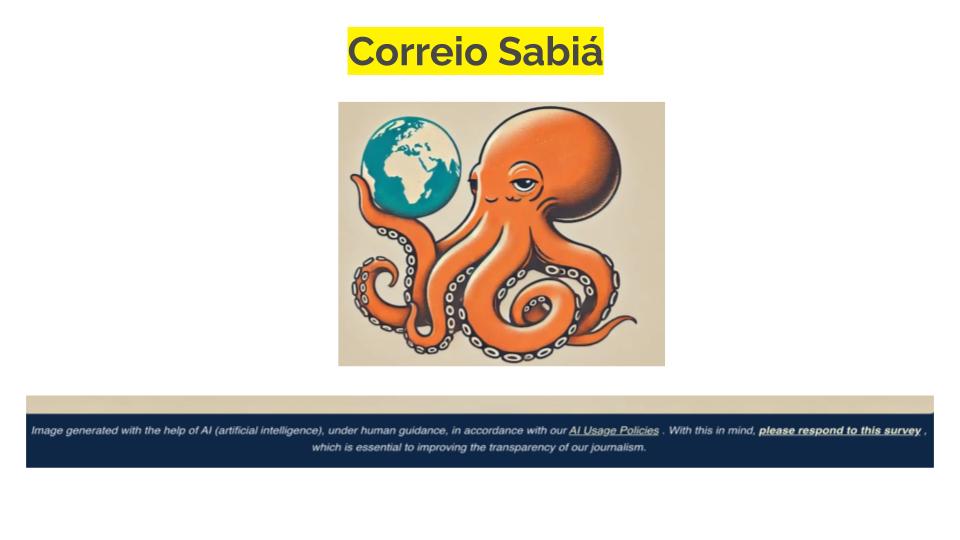

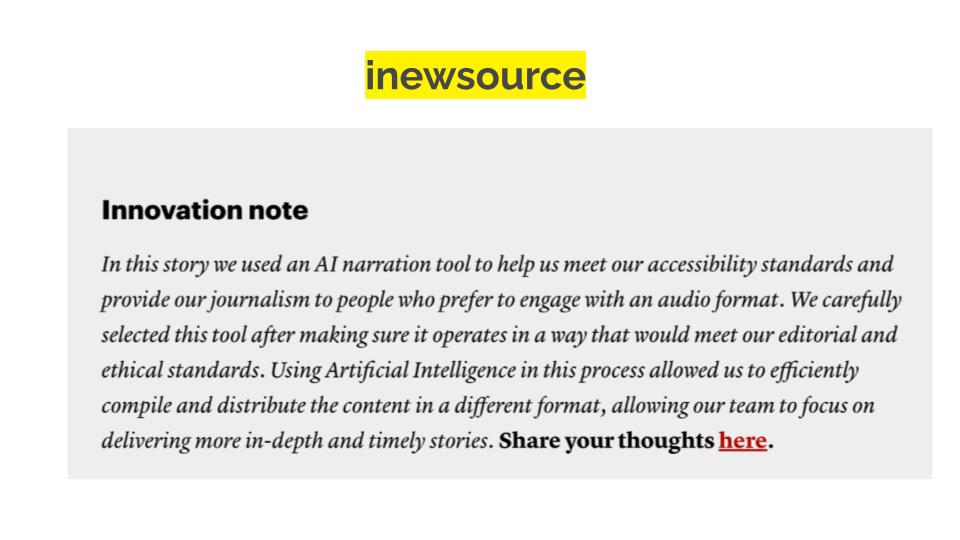

Examples: The examples below show how newsrooms are being transparent about their use of AI, including both AI use policies and AI use disclosures. Ready for more? Jump to our full example database. If you have an example of AI use transparency, use this form to share it with us.

How to use AI ethically

Artificial intelligence is a tool in the journalism toolkit, not a replacement for journalists. It cannot substitute for the value, skills and thoughtfulness that human reporters bring.

As we incorporate the use of AI, we have to keep in mind the principles we find in ethics codes like the Society of Professional Journalists’ and our own newsroom’s. We have to be accurate, be fair, be transparent, be independent, and commit to minimizing harm.

Building trust with audiences requires consistent, reliable adherence to our ethics, especially when it comes to new and unfamiliar technologies. Ethical use of AI means carefully considering when to use it, what tools to use and how to use it to assist with or create news content.

In this section, you’ll find resources to help you make informed, ethics-driven decisions about AI use in your journalism, with the goal of maintaining and strengthening trust with your community.

Spotting and disclosing AI in content you don’t control

News consumers overwhelmingly say they want the use of AI to be disclosed. But sometimes you might not know whether AI was used, because the content comes from a person, vendor or department your newsroom doesn’t oversee.

The reality, though, is that if a news consumer suspects AI use in content published on your website, in your newsletter, on your social feeds or on air, they will associate that with you, whether the content came from an outside vendor or your in-house team.

Use this Trusting News AI Resource: Spotting and disclosing AI in content you don’t control, to ask the right questions, make informed decisions and build trust when AI might have been used in the creation, packaging or distribution of content you don’t fully control. We have guidance for the following situations:

- Freelancers (for publications and for freelancers)

- Partner content (cartoons, crosswords, images, wire services)

- Sources and letters to the editor

- User-generated content

- Advertisers and sponsored content

Resources around AI adoption, ethics and decision-making

Use the following resources to help you make ethical decisions around your use of AI. And if you have a suggestion for a resource to add, use this form to share it with us.

- Poynter’s Ethical AI Guidelines for Journalists

- News/Media Alliance’s AI Principles

- Online News Association’s:

  - AI newsroom case studies

  - AI trainings and webinars (videos cover how to use tools, ethics, transparency, legal issues to consider and more)

- Partnership on AI’s:

  - 10-Step Guide for AI Adoption in Newsrooms

  - 5 recommendations for newsrooms adopting AI

  - Responsible Practices for Synthetic Media

- Database: AI Tools for local newsrooms

- Associated Press video series on verification (can help with spotting AI use in content for mis/disinformation)

Follow the latest AI research

One part of using AI tools ethically is to understand more about the tools and how the public feels about them. We have dedicated a whole section of this Trust Kit to providing tools for learning what your community thinks about AI and your use of it, and we hope you’ll use them. But it’s also worth paying attention to ongoing research more broadly about public perceptions of AI.

At Trusting News, we are gathering relevant research on this topic here. We are also including links to some of the research we find most valuable for journalists below. If you know of research we should be aware of, use this form to share it with us.


- Reuters Digital News Report 2025: How audiences think about news personalization in the age of AI

- Poynter: Audiences are still skeptical about generative AI in news

- Ipsos: People still largely prefer humans to create content, not AI

- Pew Research Center

- 2025 Edelman Trust Barometer

- Trusting News research:

How to educate

Educating the community about AI and technology is one way we believe journalists can build trust with their audience. By demystifying complex technologies and explaining how they’re used in journalism and their community, journalists can foster transparency and understanding. They can also model responsible exploration with tools that can seem intimidating. This education can empower the audience to critically evaluate the role of AI in the world and make decisions about how they use it and want to see it used in their communities.

Survey data: Audiences want AI education

News consumers say they want to be educated about AI and its use and would welcome that education from journalists.

In a Trusting News cohort, more than 80% of the survey respondents said it would be helpful if a newsroom provided information and tips to better understand AI in general and detect when AI was used in content creation. The demand for education around AI became even more clear during the one-on-one interviews.

When asked about education and engagement opportunities around AI, people told the journalists:

- To offer workshops and create guides/glossaries or articles explaining AI’s role in reporting, as it would help people differentiate between ethical AI use and misuse

- To offer opportunities for people to learn about AI’s capabilities and limitations, potentially through outreach events or interactive/Q&A sessions

- To produce more in-depth articles and transparency around AI’s use in journalism

- That they appreciated being consulted and wanted newsrooms to continue engaging the public in conversations about AI

Some, particularly those who said they were knowledgeable about AI, were less interested in educational opportunities but said they still believed these efforts could benefit the general public and smaller news organizations.

How journalists can help people understand AI

Because most people surveyed said they want journalists to provide tips for better understanding AI, Trusting News would like to see newsrooms explore, and would like to help them explore, the following opportunities:

- Develop educational resources. Create guides and articles that explain AI technology, how to detect it and more.

- Host workshops and webinars. Offer sessions where audiences can learn about AI and its role in the information space, as well as how it can be used in daily life and how it is being used in other ways throughout the community.

- Host listening events. Continually ask the community about their thoughts on AI use in news coverage, the concerns they have, and what they want to see and would find helpful.

If journalists are seen by their community as a trusted and useful source of information and explanations about AI, that can strengthen the bond between journalists and their community, bolstering trust and credibility in the news they deliver. We hope you will explore how best to educate your communities about AI and use resources from the following organizations:

Examples: How journalists are getting transparent around AI

Our example database has dozens of examples of newsroom AI use policies and AI use disclosures. Have a great AI example? Submit it to our database here!