New research shows importance of being specific when disclosing how AI was used

New research: When disclosing use of AI, be specific

Trusting News is excited to announce a new opportunity for newsrooms to participate in our research and experimentation focused on transparency around AI use in journalism. This initiative is made possible by a grant from the Patrick J. McGovern Foundation. At Trusting News, we’ve been exploring how transparency around journalists’ use of AI affects audience trust. Our first newsroom cohort gathered insights into audience perceptions of AI, and from it we learned that journalists should:

- Disclose the use of AI and find ways to explain why AI was used and how humans reviewed/fact-checked for accuracy and ethical standards

- Engage with their audience on the topic of AI

- Invest in educating their communities about AI

- Practice and show responsible AI use in their news products

Building on that foundation, we then conducted pilot research with Dr. Benjamin Toff and Suhwoo Ahn at the University of Minnesota to understand whether specific words or phrases in public-facing disclosures affect audience comfort levels.

How the research was done: The pilot study collected survey responses from a quasi-representative sample of 2,000 Americans, who were asked to rate how comfortable or uncomfortable they were with different hypothetical uses of AI in journalism. The descriptions of how AI was used, why it was used, and what degree of human oversight was included were randomly varied. This randomized message testing allowed us to assess how small tweaks in language could elicit different reactions from the public, while helping to set up a further round of experimentation with labeling in the coming months.

Here’s what we learned

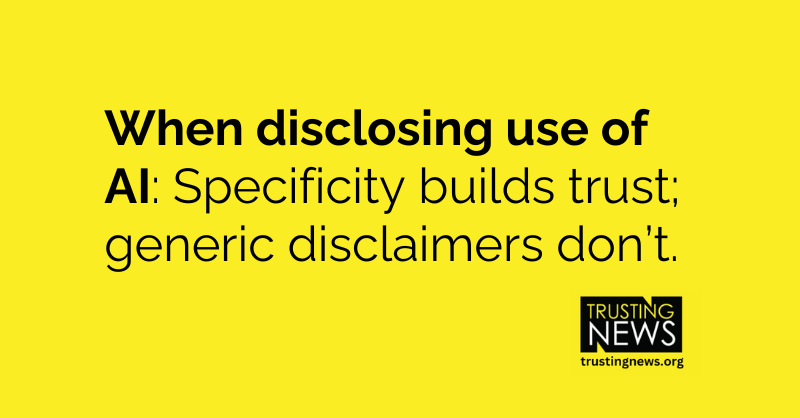

1. Specificity matters most.

- Audiences respond better to detailed disclosures about how AI is being used in the newsroom. Generic disclaimers were viewed less favorably and garnered less trust.

- Word choice matters less. Audiences want to know specifically what AI was used to do, but the words journalists use in the description seem less important.

- When getting specific about AI’s role, it is better to focus on how it improves news quality or the overall experience for the user rather than on quantity (allowing more to be done, content created for content’s sake, etc.).

2. Comfort varies by task.

- Audiences are more comfortable with AI being used for tasks like transcription or translation than for data analysis.

- Despite enthusiasm among journalists for using AI in investigative or data-heavy reporting, audiences express hesitation about those uses unless they clearly understand what role AI played in the process.

3. “AI” vs. “automatic tool” terminology.

- Referring to AI as “AI” versus using terms like “automatic tool” makes little difference in audience perceptions.

What this means for journalists

When disclosing AI use, focus on precision over generic language. Explain exactly how AI contributed to your work and emphasize its role in enhancing quality or creating content your audience values.

For example:

- Instead of: “AI was used to allow for more investigative reporting.”

- Try: “AI was used to quickly review public records or other data obtained while reporting.”

Transparency doesn’t mean overloading your audience with technical details. Instead, share enough to demystify the process and highlight the human judgment behind the work.

Remember, we have sample disclosure language you can use. We are suggesting it based on other trust strategies focused on transparency, specifically our work on which language works best to build trust when explaining reporting goals, mission or process:

Sample disclosure: In this story we used (AI/tool/description of tool) to help us (what AI/the tool did or helped you do). When using (AI/tool), we (fact-checked, had a human check, made sure it met our ethical/accuracy standards). Using this allowed us to (do more of x, go more in depth, provide content on more platforms).

See more examples of what this transparency language could look like in our AI Trust Kit.

What’s next: Apply to join our paid cohort

These findings will inform our ongoing research as we work with our second newsroom cohort to test in-story disclosures. We are looking for 10 newsrooms to join this second cohort. Teams of two or three people will participate from each newsroom. Participants can be from any role in the newsroom, as long as they have some involvement in decisions about AI use. (This opportunity is not a good fit for freelancers.)

Each participating newsroom will receive $2,000 upon completing the program, which will run from February 3 to April 30, 2025. Find more details about the AI cohort here and apply here.

We also will be answering questions about this opportunity on Thursday at 3 p.m. EST. Register for the Q&A here.

At Trusting News, we learn how people decide what news to trust and turn that knowledge into actionable strategies for journalists. We train and empower journalists to take responsibility for demonstrating credibility and actively earning trust through transparency and engagement. Learn more about our work, vision and team. Subscribe to our Trust Tips newsletter. Follow us on Twitter and LinkedIn.

Assistant director Lynn Walsh (she/her) is an Emmy award-winning journalist who has worked in investigative journalism at the national level and locally in California, Ohio, Texas and Florida. She is the former Ethics Chair for the Society of Professional Journalists and a past national president for the organization. Based in San Diego, Lynn is also an adjunct professor and freelance journalist. She can be reached at lynn@TrustingNews.org and on Twitter @lwalsh.