Not being transparent about your use of AI could be worse and goes against journalistic ethical standards

Disclose AI use (even if it hurts trust)

We’re excited to share we have new research about how audiences are responding to AI use in news. The latest findings have led to the development of new resources to help you build trust with your use of AI, which you can check out in our AI Trust Kit.

Over the next three weeks in this Trust Tips newsletter, we’ll continue to highlight tips for how to build trust with your use of AI based on this new research. Last week we shared our first tip: Engage with your audience before, during and after you use AI.

This week, we are talking about transparency. Why? News consumers continue to tell newsrooms they want journalists to disclose their use of AI.

Transparency: What news consumers say they want

Previous Trusting News research found that an overwhelming majority (93.8%) of news consumers said they want the use of AI to be disclosed.

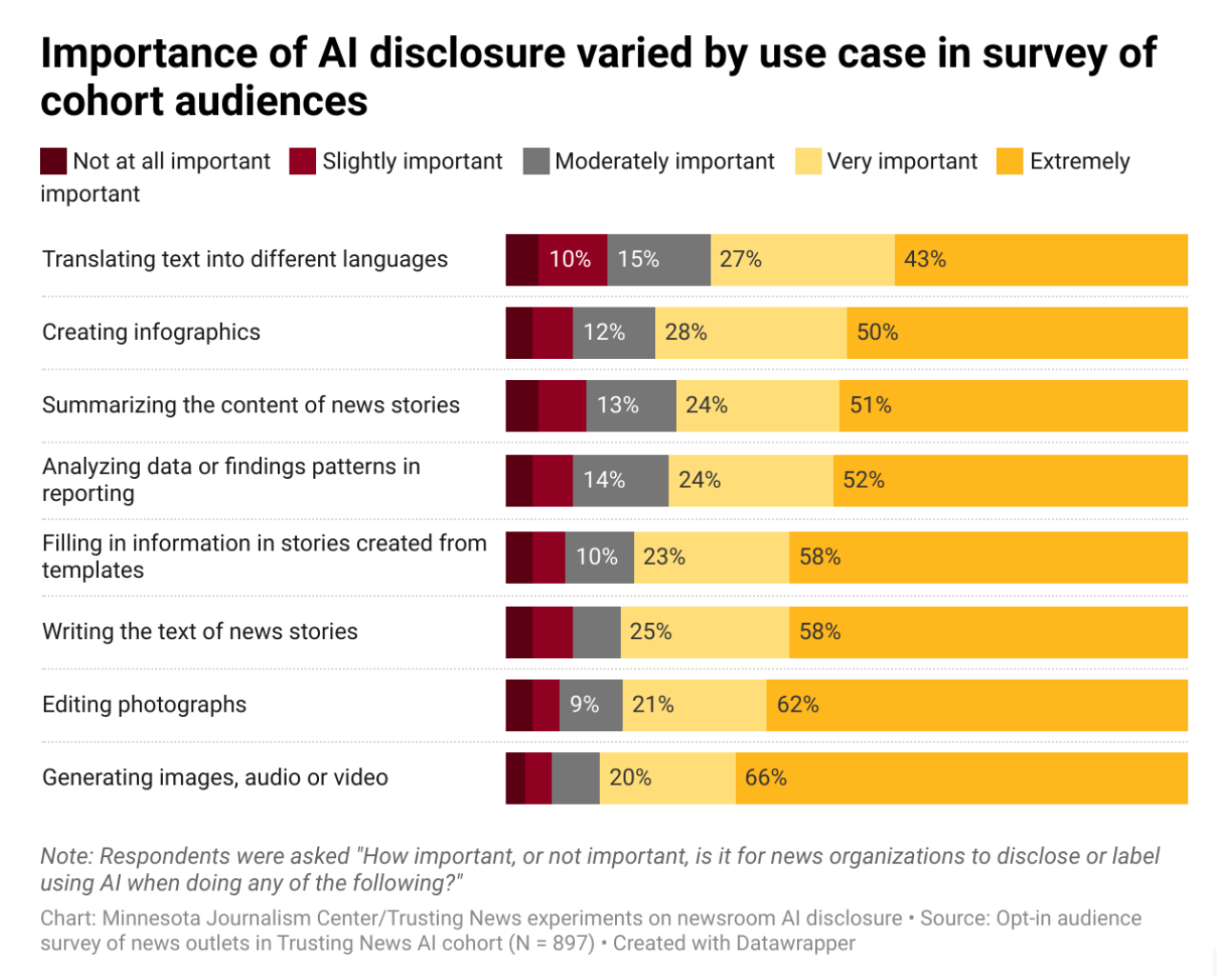

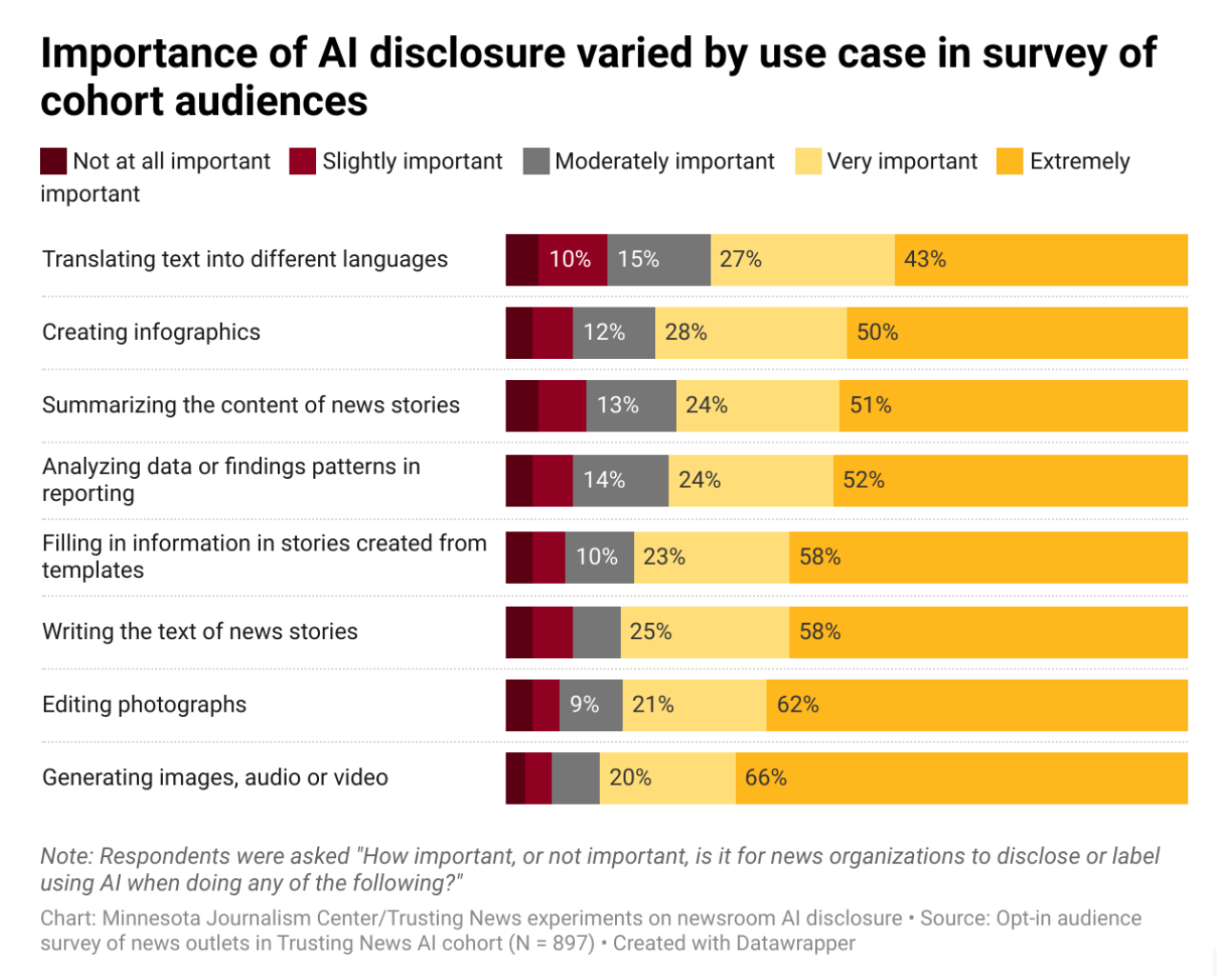

In our latest research, the only scenario where most people didn’t consider disclosure “extremely important” was when AI was used for translation, and even then, 43% of people still wanted that use disclosed.

How news consumers respond to transparency

News consumers are telling us they want disclosure and transparency. What happens when journalists give it to them?

That’s what we set out to find in this new round of research alongside 10 partner newsrooms. The newsrooms wrote personalized AI use disclosures and added them to stories where AI was used. At the end of each disclosure, the newsrooms invited news consumers to take a survey about the newsroom’s AI use.

Here’s what we learned from those survey results:

- After seeing an AI-use disclosure, 42% of respondents said they were less likely to trust the story, while 30% said they were more likely.

- Younger people and those already distrusting of news in general were more likely to report a negative impact on trust from AI use labels.

- People who use AI more in their daily lives had a more positive response to newsroom AI use disclosures.

- People said they wanted more information about “how” and “why” AI was used and about “human oversight” over the use of AI.

Read more about the experiment and how it worked, and find more in-depth analysis of the results, in this research post.

The trust paradox

While it’s true the surveys show more people trusted a specific news story less after seeing a disclosure (42%) than trusted it more (30%), other research conducted using A/B testing paints a slightly more nuanced picture.

People have strong feelings about AI, and our research shows that the fact AI was used at all seems to matter more than any explanation, and that it elicits strong (often negative) feelings about the technology.

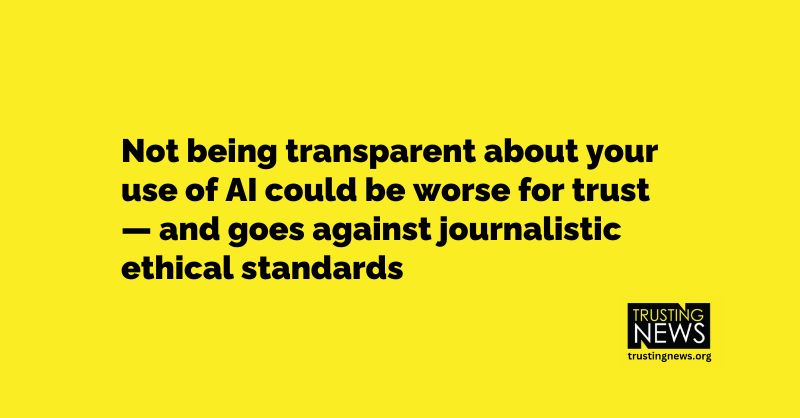

Still, transparency matters and should be prioritized, in part because the message from news consumers is clear: They continue to tell us they want disclosure. This level of transparency is also ethically aligned with journalism: an analysis of the most widely used journalism ethics codes would support journalists being transparent about the use of AI in their news content. And hiding AI use could damage trust even more than being transparent.

How to disclose your use of AI

While this research might tempt journalists to use AI and not say anything in hopes people don’t notice or inquire about it, journalists should instead lean toward transparency in their AI use.

Here’s how:

- Have a public-facing AI use policy. All newsrooms should get on the record about how they are (or are not) using AI. An editor’s column or an FAQ can be a less formal way to share your process. Picture a headline like “How our newsroom is exploring AI in our work.” Use language like “we’re asking questions” or “we’re exploring.” Say “we typically” or “we won’t usually” rather than “we always” or “we never.” Use this Trusting News AI Worksheet to create an AI use policy based on experimentation and user trust. If you want a more in-depth and formal guide, Poynter has extensive AI ethics guidelines and sample policy language.

- Include in-story AI use disclosures that emphasize commitment to accuracy/ethics. When disclosing AI use in individual stories and news content, newsrooms must explain how the content can still be trusted to be accurate and ethical. This new research shows just how important it is to include this information and find ways to emphasize it to your audience.

- Include in-story AI use disclosures that emphasize human involvement. News consumers, especially the most engaged and loyal news consumers, are worried that AI will lead to lower quality news content, mis/disinformation and journalism job loss. To help ease these concerns, explain how humans — real, live journalists — are making sure that doesn’t happen.

Not sure how to write a disclosure? We updated our Mad Libs-style template to help.

In this story, we used (AI/tool/description of tool) to help us (what AI/the tool did or helped you do). When using (AI/tool), we (fact-checked/made sure it met our ethical/accuracy standards) and (had a human check/review). Using this allowed us to (do more of x, go more in depth, provide content on more platforms, etc.). Learn more about our approach to using AI (link to AI policy/AI ethics).

If you are looking for more support, we have a worksheet to help you complete the Mad Libs-style template above and wrote up some sample language you can include in your AI disclosures. For even more inspiration, view newsroom AI use disclosure examples below and in this example database.

We also think that, at this point in time, disclosing the use of AI may not be enough on its own to build trust. People want transparency around the use of these tools, and they seem to always want more information to come with it. Also, for some audiences, any association with AI is viewed as a red flag, and many are still learning what these tools can and can’t do. Journalists need to consider going further with behind-the-scenes explainers and AI literacy initiatives. More on that next week!

Resources to help you build trust with your use of AI

We have a lot of *NEW* resources and tools to make this work easier, including surveys to help you listen and understand what your audience thinks about AI, worksheets and templates to help you get transparent about AI, and resources and guidelines to help you be ethical.

You can find all these resources in our AI Trust Kit.

At Trusting News, we learn how people decide what news to trust and turn that knowledge into actionable strategies for journalists. We train and empower journalists to take responsibility for demonstrating credibility and actively earning trust through transparency and engagement. Learn more about our work, vision and team. Subscribe to our Trust Tips newsletter. Follow us on Twitter, BlueSky and LinkedIn.

Assistant director Lynn Walsh (she/her) is an Emmy award-winning journalist who has worked in investigative journalism at the national level and locally in California, Ohio, Texas and Florida. She is the former Ethics Chair for the Society of Professional Journalists and a past national president for the organization. Based in San Diego, Lynn is also an adjunct professor and freelance journalist. She can be reached at lynn@TrustingNews.org and on Twitter @lwalsh.