Not being transparent about your use of AI could be worse than disclosing it, and it goes against journalistic ethical standards

Go beyond AI use disclosures and policies to build trust

We know people are skeptical about journalists using AI. That’s why, in the past few weeks of Trust Tips, we’ve been sharing highlights from our new research showing how journalists can build trust with their use of AI.

- We started with the foundational step of engaging and listening to your audience before, during and after you use AI.

- Then we walked through how and why to disclose your AI use (even if it hurts trust).

This week, we are focusing on transparency again. Remember: Our AI research shows people want transparency around the use of these tools, and they seem to always want more detailed information alongside that transparency.

Also, for some audiences, any association with using AI is viewed as a red flag, and many are still learning about what these tools can and can’t do.

For these reasons, we believe journalists need to do more than have a public-facing AI use policy and in-story AI use disclosures. We think experimenting with other transparency initiatives and AI literacy efforts may help address the trust paradox of AI use disclosures we discussed in last week’s newsletter (a majority of people trusted a specific news story less when they saw a disclosure).

What does this look like?

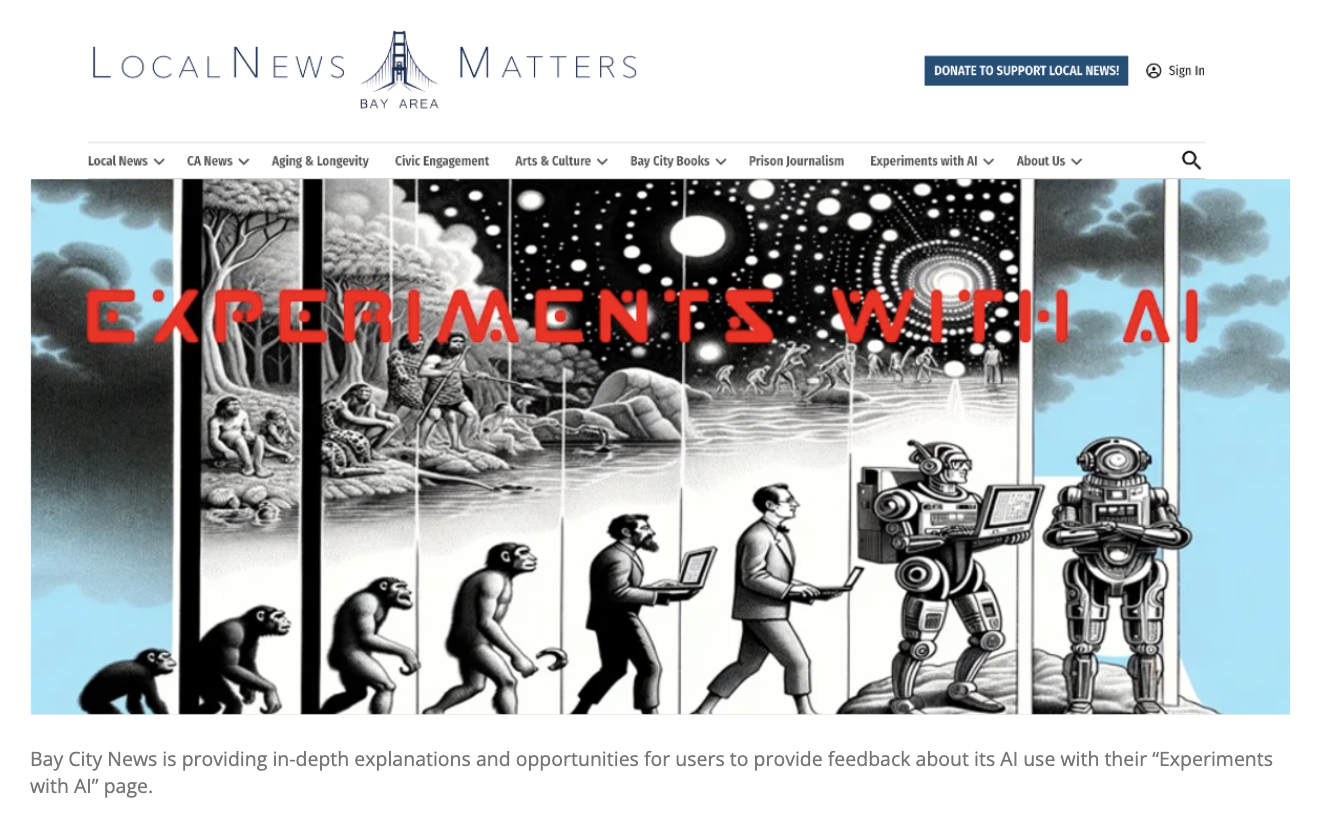

One newsroom leaning into more transparency around the use of AI is Bay City News Foundation.

On its “Experiments with AI” page, the newsroom shares updates and details about its AI experimentation so audiences can learn alongside them. The site introduces various test projects, including an AI‑assisted news roundup podcast and AI-assisted cartoons, while inviting public feedback.

The “experiments” page aims to demystify AI, reflect on ethical challenges and uphold journalism values like accuracy, trust and community input.

Bay City News provides in-depth explanations of its AI use, along with opportunities for users to give feedback, on its “Experiments with AI” page.

Some of the page elements include:

- A section highlighting news stories about AI. The newsroom is highlighting how AI is being used in their community — and it’s not all doom and gloom. If we want to build trust (or at least not lose it) through our use of AI, journalists should examine how they are covering AI in news stories. If all (or most) of our coverage of AI focuses on potential dangers, but then we use the tools ourselves, why would we expect people to automatically trust our own AI use? We should continue to be critical and focus on accountability, but unless we’re also offering explanations and responsible use cases, are we really providing complete context and fair coverage? At the very least, we should highlight how these tools are benefiting our work and share how we are approaching our use of them with an ethical lens. By being transparent about both the advantages and challenges, we can allow the public to learn from our experiences and make more informed decisions about their own use of AI.

- A regular ask for feedback. The page includes a link to a survey for people to provide feedback to the newsroom about their explanation of their AI use. Regularly asking for feedback about your AI use and how you’re transparent about that use helps you understand how your audience feels, and whether you should adjust or expand the information you provide. You will only be able to find out if your audience understands your explanations or wants more information by asking them. (Copy our pre-made survey if you want help asking your audience for feedback.)

- In-depth explanations about their use of AI. The news team explains how they are using AI in detail. These explanations have dedicated posts and updates about the work, including explanations about what worked well and what was challenging. For their election results explanation, they even provide a playbook to guide other newsrooms through how to duplicate the work. For the cartooning experimentation, you hear firsthand from their cartoonist, Joe Dworetzky. He walks users through his process of experimenting with a variety of AI tools to assist in creating cartoons.

Are you experimenting with more in-depth transparency initiatives around your use of AI? Let me know! We are collecting examples and would love to see yours. Use this form to share it with us or feel free to get in touch with me: Lynn@TrustingNews.org.

Apply: NEW AI cohort + innovation grants focused on AI education

Another way journalists may be able to be more transparent with their audiences about AI is by investing in AI education and literacy in their communities. That’s why we’re looking for newsrooms that want to experiment with educating their communities about AI: not just its use in news, but also how it works, how the public can spot it and more.

There are two ways for newsrooms to get involved:

- A paid cohort to experiment with sharing AI education resources with your community (Deadline to apply: August 22)

- $5,000 innovation grants for newsrooms that want to explore an idea to educate their community about AI (Deadline to apply: August 29)

Find all the details and the application here.

Resources to help you build trust with your use of AI

We have a lot of NEW resources and tools to make this work easier, including resources to help you listen and understand what your audience thinks, resources to help you get transparent about AI, and resources to help you be ethical. You can find all these resources in our AI Trust Kit.

At Trusting News, we learn how people decide what news to trust and turn that knowledge into actionable strategies for journalists. We train and empower journalists to take responsibility for demonstrating credibility and actively earning trust through transparency and engagement. Learn more about our work, vision and team. Subscribe to our Trust Tips newsletter. Follow us on Twitter, BlueSky and LinkedIn.

Assistant director Lynn Walsh (she/her) is an Emmy award-winning journalist who has worked in investigative journalism at the national level and locally in California, Ohio, Texas and Florida. She is the former Ethics Chair for the Society of Professional Journalists and a past national president for the organization. Based in San Diego, Lynn is also an adjunct professor and freelance journalist. She can be reached at lynn@TrustingNews.org and on Twitter @lwalsh.